If you work with CSV files, Excel spreadsheets, or Markdown tables, you may run into duplicate rows. This can happen when the same data is entered manually more than once or when duplicates are imported from other sources.

Whatever the reason, removing duplicate rows is an important part of data cleaning. In this article, we will cover several ways to quickly remove duplicate rows from CSV files, Excel spreadsheets, and Markdown tables.

1. Online spreadsheet tool (recommended)

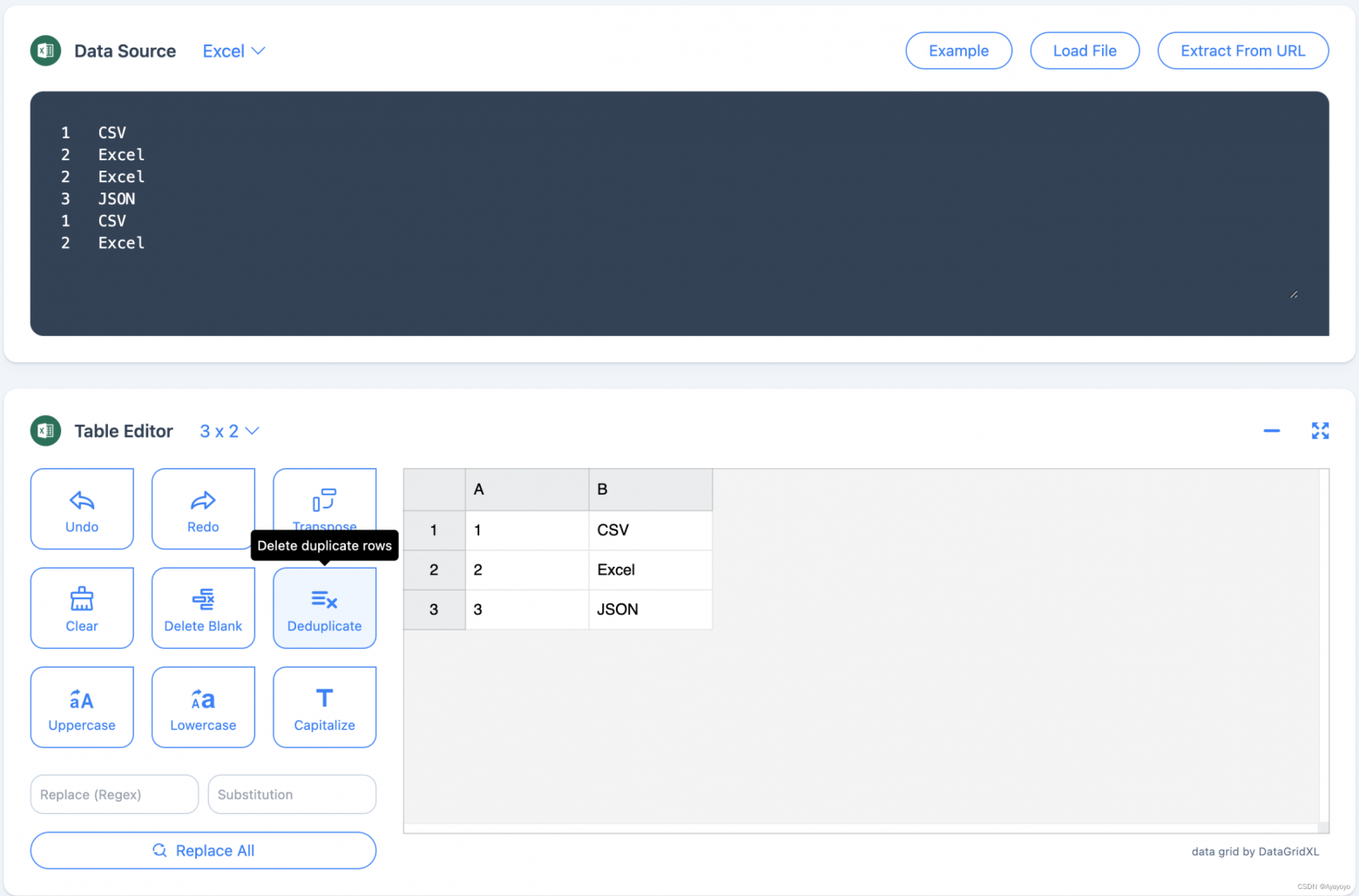

You can use an online tool called “TableConvert” to find and remove duplicate rows in CSV, Excel, and Markdown tables.

Open your browser, go to https://tableconvert.com/excel-to-excel, paste or upload your data, and click “Deduplicate” in the table editor. It’s quick and easy. See the image above.
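If you prefer not to paste data into a website, the same idea can be sketched in Python for a Markdown table. This is a minimal sketch, not how TableConvert works internally. It assumes a simple pipe table whose first two lines are the header row and the separator row, and that no cell contains an escaped `\|`. The function name `dedupe_markdown_table` is hypothetical.

```python
def dedupe_markdown_table(text: str) -> str:
    """Remove duplicate body rows from a simple pipe-delimited Markdown table.

    Assumes the first line is the header row, the second is the separator
    row (e.g. "| --- | --- |"), and every later line is a data row.
    """
    lines = text.strip().splitlines()
    header, separator, body = lines[0], lines[1], lines[2:]

    seen = set()
    unique_rows = []
    for line in body:
        # Compare rows by their trimmed cell values, so extra spaces
        # around "|" do not make otherwise identical rows look different.
        cells = tuple(cell.strip() for cell in line.strip().strip("|").split("|"))
        if cells not in seen:
            seen.add(cells)
            unique_rows.append(line)  # keep the first occurrence as written

    return "\n".join([header, separator, *unique_rows])


table = """\
| Name  | City   |
| ----- | ------ |
| Alice | Paris  |
| Bob   | Berlin |
| Alice | Paris  |
"""
print(dedupe_markdown_table(table))
```

Rows are compared cell by cell after trimming whitespace, so `| 1 | 2 |` and `|1|2|` count as the same row, while the first copy is kept exactly as it was written.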

2. Removing duplicate rows in Excel

Removing duplicate rows in Excel is simple. First, open the Excel file and select the range of cells you want to check (or click any cell inside the table).

Then open the “Data” tab and click “Remove Duplicates”. Excel shows a dialog box where you choose which columns to compare: rows count as duplicates only when they match in every checked column. Click “OK”, and Excel deletes the duplicate rows, keeps the first occurrence of each, and reports how many duplicates were removed.
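The column checkboxes in Excel's dialog have a close counterpart in pandas: the `subset` parameter of `DataFrame.drop_duplicates`, with `keep="first"` keeping the first occurrence. The sketch below uses made-up example data rather than a real workbook; loading an actual file with `pd.read_excel` would work the same way but needs the `openpyxl` package for `.xlsx` files.

```python
import pandas as pd

# Made-up example data standing in for a worksheet.
df = pd.DataFrame(
    {
        "Name": ["Alice", "Bob", "Alice", "Alice"],
        "Email": ["alice@example.com", "bob@example.com", "alice@example.com", "alice@example.com"],
        "Date": ["2024-01-05", "2024-01-06", "2024-02-10", "2024-01-05"],
    }
)

# Like checking only "Name" and "Email" in Excel's dialog: rows are duplicates
# when those two columns match, and the first occurrence is kept.
by_selected_columns = df.drop_duplicates(subset=["Name", "Email"], keep="first")

# Like leaving every column checked: only fully identical rows are dropped.
by_all_columns = df.drop_duplicates(keep="first")

print(len(df), len(by_selected_columns), len(by_all_columns))  # 4 2 3
```

Checking fewer columns removes more rows: here the two later "Alice" rows are dropped when only name and email are compared, but only the exact repeat is dropped when all columns are compared.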

3. Removing duplicate rows from a CSV file using Python

If your data is saved in a CSV file, you can use Python to remove duplicate rows. First, install the pandas library (for example, with `pip install pandas`). Then use the following code to read the CSV file, remove duplicate rows, and save the cleaned data back to the file:

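```python
import pandas as pd

# Read the CSV file into a DataFrame (the first row is used as the header)
data = pd.read_csv("your_file.csv")

# Drop rows that are identical across all columns, keeping the first occurrence
data = data.drop_duplicates()

# Write the cleaned data back to the same file, without the DataFrame index column
data.to_csv("your_file.csv", index=False)
```

This code reads the CSV file, removes duplicate rows, and writes the cleaned data back to the original file. Because it overwrites `your_file.csv`, pass a different file name to `to_csv` if you want to keep the original.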
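If you cannot install pandas, a similar result can be sketched with Python's built-in `csv` module. This minimal version uses the hypothetical function name `dedupe_csv`. It treats the first row as a header, compares the remaining rows as raw text, and writes to a separate output file instead of overwriting the input. Because pandas converts numeric columns before comparing, results can differ from the pandas version for values such as `1` and `1.0`.

```python
import csv


def dedupe_csv(src_path: str, dst_path: str) -> int:
    """Copy src_path to dst_path, skipping rows that repeat an earlier row.

    The first row is treated as a header and always written.
    Returns the number of duplicate rows that were skipped.
    """
    seen = set()
    removed = 0
    with open(src_path, newline="", encoding="utf-8") as src, \
         open(dst_path, "w", newline="", encoding="utf-8") as dst:
        reader = csv.reader(src)
        writer = csv.writer(dst)

        header = next(reader, None)  # None if the file is empty
        if header is not None:
            writer.writerow(header)

        for row in reader:
            key = tuple(row)  # lists are unhashable, so store rows as tuples
            if key in seen:
                removed += 1
                continue
            seen.add(key)
            writer.writerow(row)  # first occurrence is kept, order preserved
    return removed
```

For example, `dedupe_csv("your_file.csv", "your_file_deduped.csv")` would write the unique rows to a new file and return how many duplicates it skipped. Rows are streamed one at a time, so only the set of unique rows is held in memory.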